A small step into HA Proxmox

High availability, or HA, is a "nice to have" for homelab setups. Depending on your initial choices, it can be costly to convert an existing setup to an HA configuration. In this blog post, I will talk about lessons learned from my own mistakes, as well as common strategies that seem to be the consensus of the homelab crowd on the web.

Choose wisely.

How far can you go with how much computing power?

Surprisingly, a lot further than one might think. I began experimenting with a "trash can" Mac Pro as my primary homelab server. A 1 TB SSD backed by 64 GB of memory at a reasonable price was quite attractive. The Mac Pro is also sleek and can sit in the living room as a decorative object. However, there are some important showstoppers that weigh against using a Mac Pro for serving the home.

Here are the specs for the Mac Pro that I bought on eBay:

Processor: 8 core Intel Xeon

RAM Size: 64 GB

SSD Capacity: 1 TB

GPU: AMD FirePro D500

Processor Speed: 3.00 GHz

Release Year: 2013

Model: A1481

Hard Drive Capacity: 1 TB

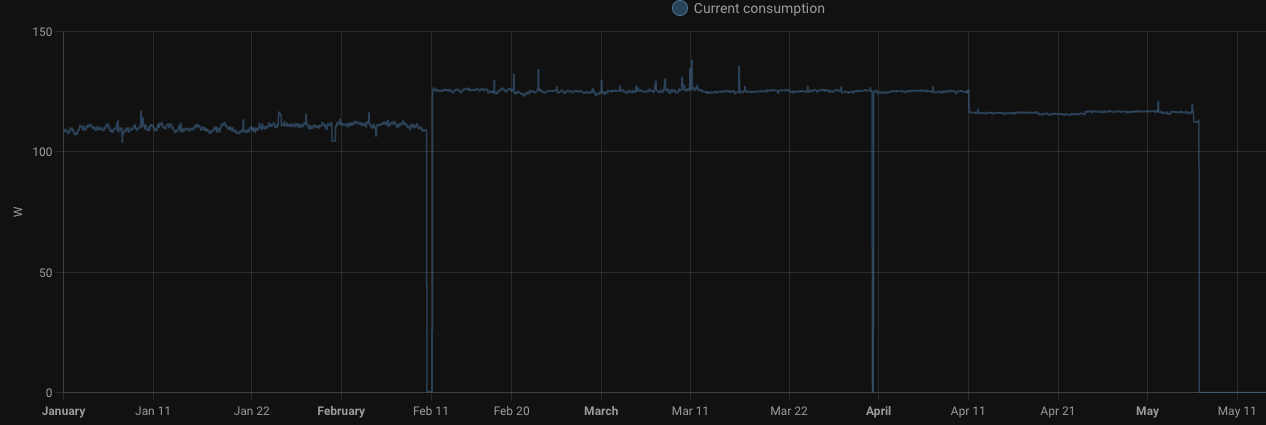

Under Proxmox, the number one issue is being unable to turn off the GPUs. From my research, macOS natively powers down the GPUs on this model while they are idle so that they do not draw much power. Here is a quick look at the energy consumption of this model, mostly under light load:

On average, it has consistently been running at around 125 kWh. That is because I could not figure out how to turn off the GPUs in Proxmox.

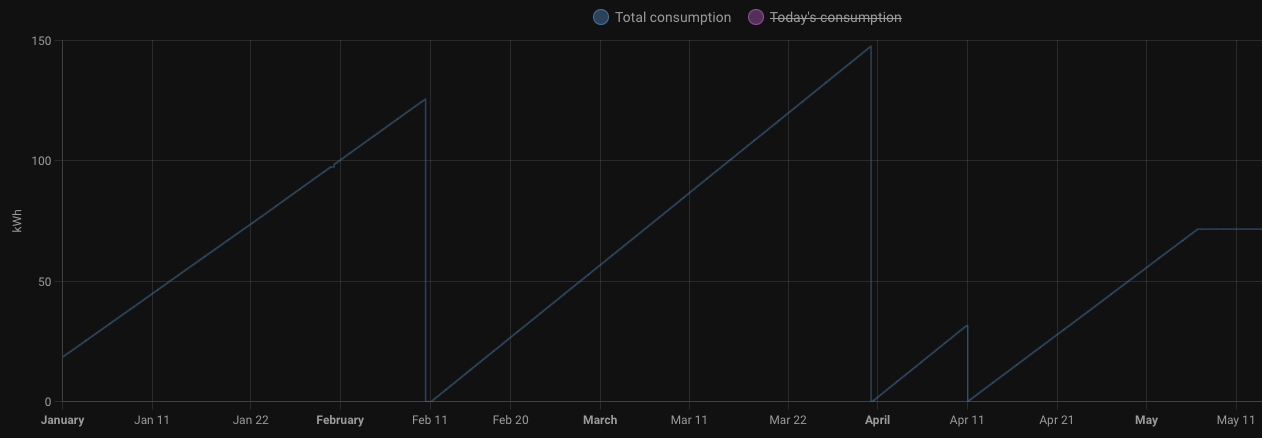

Now, let's compare the power consumption of the Mac Pro shown above to that of an HP EliteDesk 800 G3 Mini shown below, running roughly the same workload:

As much as I wanted to use the Mac Pro as a virtualization/containerization server, the cost of running it 24/7 was unreasonable given the importance and computing demands of my workloads. It is not so much about the monetary cost; the amount of energy it consumes is considerable, and upsetting once you see how much less power a mini PC needs to handle similar workloads.
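To put a 24/7 power draw into perspective, here is a minimal Python sketch that converts a constant average draw in watts (power) into kilowatt-hours per year (energy). The two wattages are hypothetical placeholders, not the readings from my charts, and the function name is just for illustration. Plug in whatever your own power meter reports.

```python
HOURS_PER_YEAR = 24 * 365  # a machine that is never switched off


def annual_energy_kwh(average_watts: float) -> float:
    """Convert a constant average power draw (W) into energy per year (kWh)."""
    return average_watts * HOURS_PER_YEAR / 1000


# Hypothetical draws for illustration only; replace with measured values.
machines = {
    "workstation-class server": 100.0,
    "mini PC": 10.0,
}

for name, watts in machines.items():
    print(f"{name}: {annual_energy_kwh(watts):.1f} kWh per year")
```

Because the conversion is linear, a machine that draws ten times the power also uses ten times the energy over the year, which is why a small difference in idle draw matters for something that runs around the clock.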

I later found out that the HP EliteDesk 800 G3 Mini is not the greatest choice for the next step, but it is still a step in the right direction.

Downtime, downtime.

What you gonna do when it comes for you?

Downtime is no fun, even for a homelab setup. I began my homelab experiments to understand and learn how containerization and orchestration work. While watching subject-matter experts on YouTube, I was quite surprised at the number of services people run in their homelab setups. Just what do you guys need those services for, right? Well, it adds up one by one, and all of a sudden you have lots of things running. (I will post the services I am running in my homelab as a separate post ONCE I figure out my VLAN setup.) Suffice it to say, I now have a lot of stuff that my household members rely on daily.

I started with a single HP EliteDesk 800 G3 Mini. It had no defense against downtime. I got myself 2 more HP minis to form a Proxmox cluster, but I was not aware of how much more work it would require.

In order to form an HA cluster, the common method is to have Proxmox running on its own ext4 disk, which also holds the local storage for local VMs/CTs. You can then have an additional data disk available for ZFS. That way, if I understand correctly, a ZFS storage can be defined at the cluster level, backed by a local pool of the same name on each node's data disk.

I already had 1 TB SSDs in the HP minis, so I had to install Proxmox itself directly on ZFS. Having ZFS available made it possible to turn on VM replication in the cluster. As of now, I do not know a better way (except Ceph or a NAS share) to handle it with the setup I have, but here is how it works:

- 2 main VMs run on different nodes.

- The cluster has a backup job scheduled to back up all VM disks to a NAS with link aggregation enabled. (I am still on 1GbE hardware so...)

- VMs have replication jobs scheduled every 2 hours. (I started with 15 minutes but that was a bit of overkill.)

It has been working well so far, except that VM migration speed during recovery is limited by the 1GbE hardware. ZFS replication takes care of synchronizing the VM disks between nodes. While setting HA up in Proxmox, my assumption was that the Proxmox HA service would keep VM disks replicated on all nodes without further setup. That was not the case. My VMs kept getting migrated to another node without their VM disks: HA could move the machine along with its configuration just fine, only to find that it could not boot it because the VM disks were missing. In the beginning I was on ext4, so disk replication was not even available.

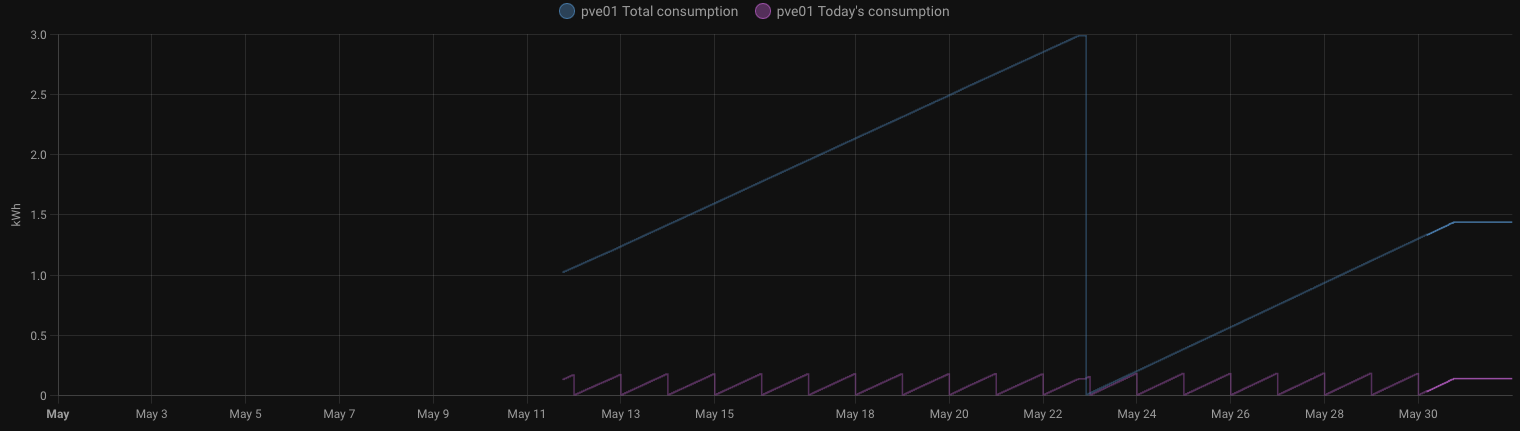
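The replication interval is a trade-off: whatever changed on a VM disk since the last replication is lost if the node dies before the next one. Here is a minimal Python sketch of that window. It assumes replications fire exactly on schedule and finish instantly, and the timestamps and function name are hypothetical, picked only for illustration.

```python
from datetime import datetime, timedelta


def data_loss_window(failure: datetime, first_sync: datetime,
                     interval: timedelta) -> timedelta:
    """Time between the last scheduled replication and the node failure.

    Assumes replications run exactly every `interval` starting at
    `first_sync` and complete instantly (a simplification).
    """
    if failure < first_sync:
        raise ValueError("failure happened before the first replication")
    # The remainder of the elapsed time is how stale the replica is.
    return (failure - first_sync) % interval


# Hypothetical timestamps, not taken from my cluster logs.
first_sync = datetime(2024, 1, 1, 0, 0)
failure = datetime(2024, 1, 1, 13, 40)

for minutes in (120, 15):
    window = data_loss_window(failure, first_sync, timedelta(minutes=minutes))
    print(f"every {minutes} min -> changes from the last {window} are lost")
```

In the worst case the window equals the full interval, so a 2-hour schedule means accepting up to 2 hours of lost changes in exchange for fewer replication runs.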

Overall, I am satisfied with the results. Initial replication of my VMs took about 5 to 8 minutes. Subsequent replications have been taking about 5 seconds each. Once the cluster detects that one of the nodes has gone poof, the total downtime is about 5 to 10 minutes due to gigabit network speeds. Unfortunately, getting the HP minis to work with at least 2.5GbE is out of my reach, so 10 minutes of downtime will have to do! I keep reading that the minimum requirement for a meaningful HA environment these days is 10GbE for production deployments and 2.5GbE for home enthusiasts. With 2.5GbE ports creeping into typical consumer households via WiFi 7 routers, a network overhaul might be due soon!
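For a rough feel of how much link speed matters for a full disk copy (such as an initial replication), here is a back-of-the-envelope Python sketch. The 40 GB disk size and the 90% link efficiency are assumptions of mine, not measurements, and the function name is hypothetical. Real transfers also depend on disk speed, compression and protocol overhead.

```python
def transfer_minutes(size_gb: float, link_gbps: float,
                     efficiency: float = 0.9) -> float:
    """Estimate minutes needed to copy `size_gb` gigabytes over a network link.

    `efficiency` is an assumed fraction of the nominal link speed that is
    actually usable for payload data.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # bytes to bits
    seconds = size_gigabits / (link_gbps * efficiency)
    return seconds / 60


disk_size_gb = 40.0  # hypothetical VM disk size

for link_gbps in (1.0, 2.5, 10.0):
    minutes = transfer_minutes(disk_size_gb, link_gbps)
    print(f"{link_gbps:>4} GbE: about {minutes:.1f} minutes")
```

This only models bulk copies. Incremental replications send just the changes since the previous run, which is why they finish in seconds even on 1GbE.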

I will be updating this post as I collect more information on the performance of my mini cluster.